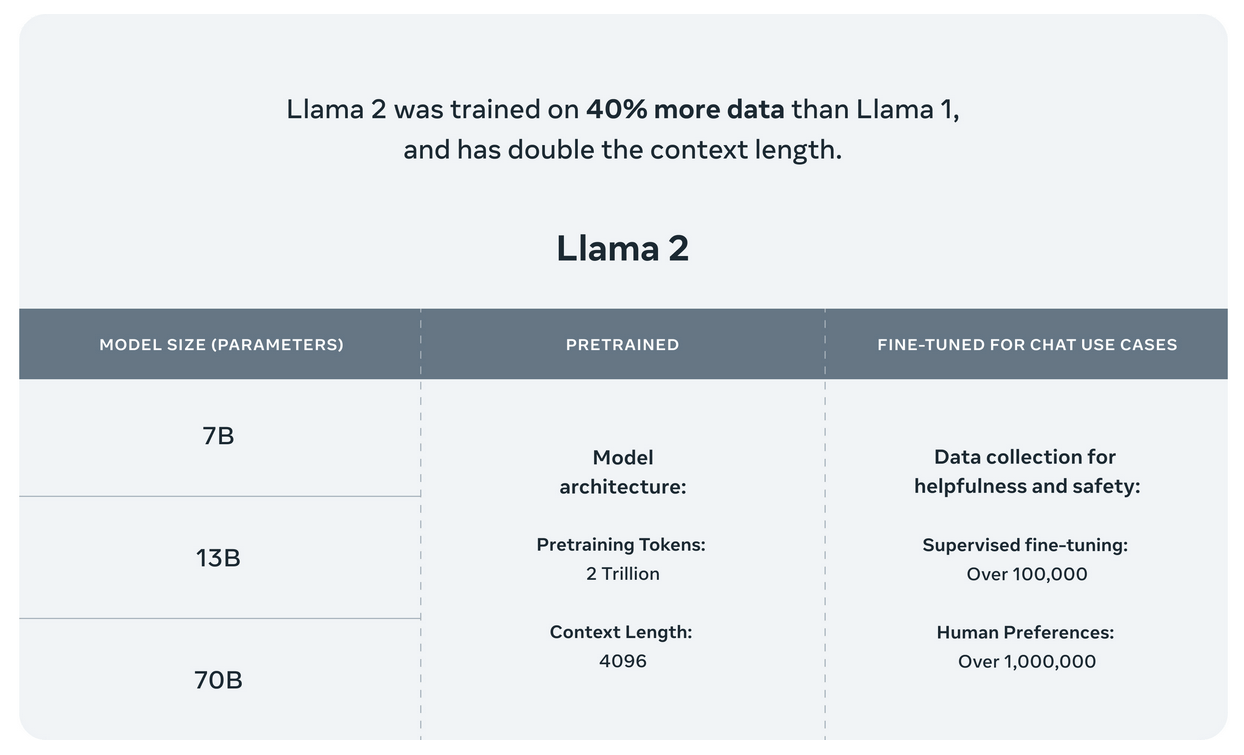

All three currently available Llama 2 model sizes (7B, 13B, 70B) are trained on 2 trillion tokens and have double the context length of Llama 1. Llama 2 encompasses a series of... Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. This is the repository for the 7B pretrained model. I ran an unmodified llama-2-7b-chat on 2x E5-2690v2, 576GB DDR3 ECC, RTX A4000 16GB. Loaded in 1568 seconds, used about 15GB of VRAM and 14GB of system memory above the... Llama 2 models are available in three parameter sizes (7B, 13B, and 70B) and come in both... LLaMA-2-7B-32K is an open-source long-context language model developed by Together, fine-tuned from Meta's original Llama-2 7B model. This model represents our efforts to contribute to...
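The memory figures quoted above can be sanity-checked with simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter. The sketch below is a rough estimate only. It assumes nominal parameter counts (7, 13, and 70 billion) and ignores activations, the KV cache, and framework overhead. The names `NOMINAL_PARAMS`, `BYTES_PER_PARAM`, and `weight_gb` are hypothetical helpers, not part of any Llama tooling.

```python
# Rough weight-memory estimate for the three Llama 2 sizes.
# Assumption: nominal parameter counts; real checkpoints differ slightly.
NOMINAL_PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_gb(size: str, dtype: str = "fp16") -> float:
    """Approximate size of the weights alone, in GB (10**9 bytes)."""
    return NOMINAL_PARAMS[size] * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for size in NOMINAL_PARAMS:
        row = ", ".join(f"{d}: {weight_gb(size, d):.1f} GB" for d in BYTES_PER_PARAM)
        print(f"{size}: {row}")
```

Under these assumptions the 7B model needs about 14 GB for 16-bit weights, which is in line with the roughly 15GB of VRAM reported above if that run used 16-bit precision (the report does not say).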

Llama 2 is a family of state-of-the-art open-access large language models released by Meta today, and we're excited to fully support the launch with comprehensive integration... Code Llama is a family of state-of-the-art, open-access versions of Llama 2 specialized on code tasks, and we're excited to release integration in the Hugging Face ecosystem. This blog post introduces the Direct Preference Optimization (DPO) method, which is now available in the TRL library, and shows how one can fine-tune the recent Llama v2 7B-parameter... In this tutorial, we will show you how anyone can build their own open-source ChatGPT without ever writing a single line of code. We'll use the Llama 2 base model, fine-tune... Llama 2 is being released with a very permissive community license and is available for commercial use. The code, pretrained models, and fine-tuned models are all being released today.
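For readers unfamiliar with DPO, the per-example loss can be sketched in a few lines. This is a minimal illustration of the published DPO objective on scalar log-probabilities, not the TRL implementation. The function name `dpo_loss`, its argument names, and the default `beta=0.1` are assumptions chosen for this example.

```python
import math


def dpo_loss(policy_chosen_logp: float,
             policy_rejected_logp: float,
             ref_chosen_logp: float,
             ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair: -log(sigmoid(beta * margin)).

    Each argument is the summed log-probability of a full response
    under either the policy being trained or the frozen reference model.
    """
    # How much more the policy favours each response than the reference does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)

    # -log(sigmoid(m)) == log(1 + exp(-m)); branch to avoid overflow.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

When the policy and the reference agree, the margin is zero and the loss equals log 2. The loss falls as the policy raises the chosen response's likelihood relative to the rejected one.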

Download: Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. Below you can find and download Llama 2... In Llama 2, the size of the context, in terms of number of tokens, has doubled from 2048 to 4096. Your prompt should be easy to understand and provide enough information for the model to generate... Install Visual Studio 2019 Build Tool. To simplify things, we will use a one-click installer for Text-Generation-WebUI, the program used to load Llama 2 with a GUI. The Llama 2 7B model on Hugging Face (meta-llama/Llama-2-7b) has a PyTorch .pth file (consolidated.00.pth) that is 13.5GB in size. The Hugging Face transformers-compatible model meta... Then you can run the script.
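Because the prompt and the generated text share the same context window, the 4096-token limit caps how much the model can produce for a given prompt. The sketch below shows that budget arithmetic. The helper names `max_new_tokens` and `fits` are hypothetical, and the token counts are assumed to come from whatever tokenizer is used to load the model.

```python
LLAMA1_CONTEXT = 2048  # context length of Llama 1, in tokens
LLAMA2_CONTEXT = 4096  # context length of Llama 2, in tokens


def max_new_tokens(prompt_tokens: int, context_length: int = LLAMA2_CONTEXT) -> int:
    """Tokens left for generation once the prompt is in the window."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_length - prompt_tokens, 0)


def fits(prompt_tokens: int, new_tokens: int,
         context_length: int = LLAMA2_CONTEXT) -> bool:
    """True if prompt plus requested generation fits in the window."""
    return prompt_tokens + new_tokens <= context_length


if __name__ == "__main__":
    # A 3000-token prompt leaves room for generation only in Llama 2.
    print(max_new_tokens(3000))                  # Llama 2 budget
    print(max_new_tokens(3000, LLAMA1_CONTEXT))  # Llama 1 budget
```

A prompt that is already longer than the window leaves a budget of zero, so it has to be shortened or truncated before generation.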

In this section, we look at the tools available in the Hugging Face ecosystem to efficiently train Llama 2 on simple hardware and show how to fine-tune the 7B version of Llama 2 on a... Wairagala Wakabi, Alexandr Wang, Chris Wanstrath, Patrick Wendell, Josh Wolfe, Eric Xing, Tony Xu, Daniel Castaño... based on Llama 2 fine-tuning. Believe in our open approach to today's AI companies that have given early feedback and are...
